Originality Ratings

Intro

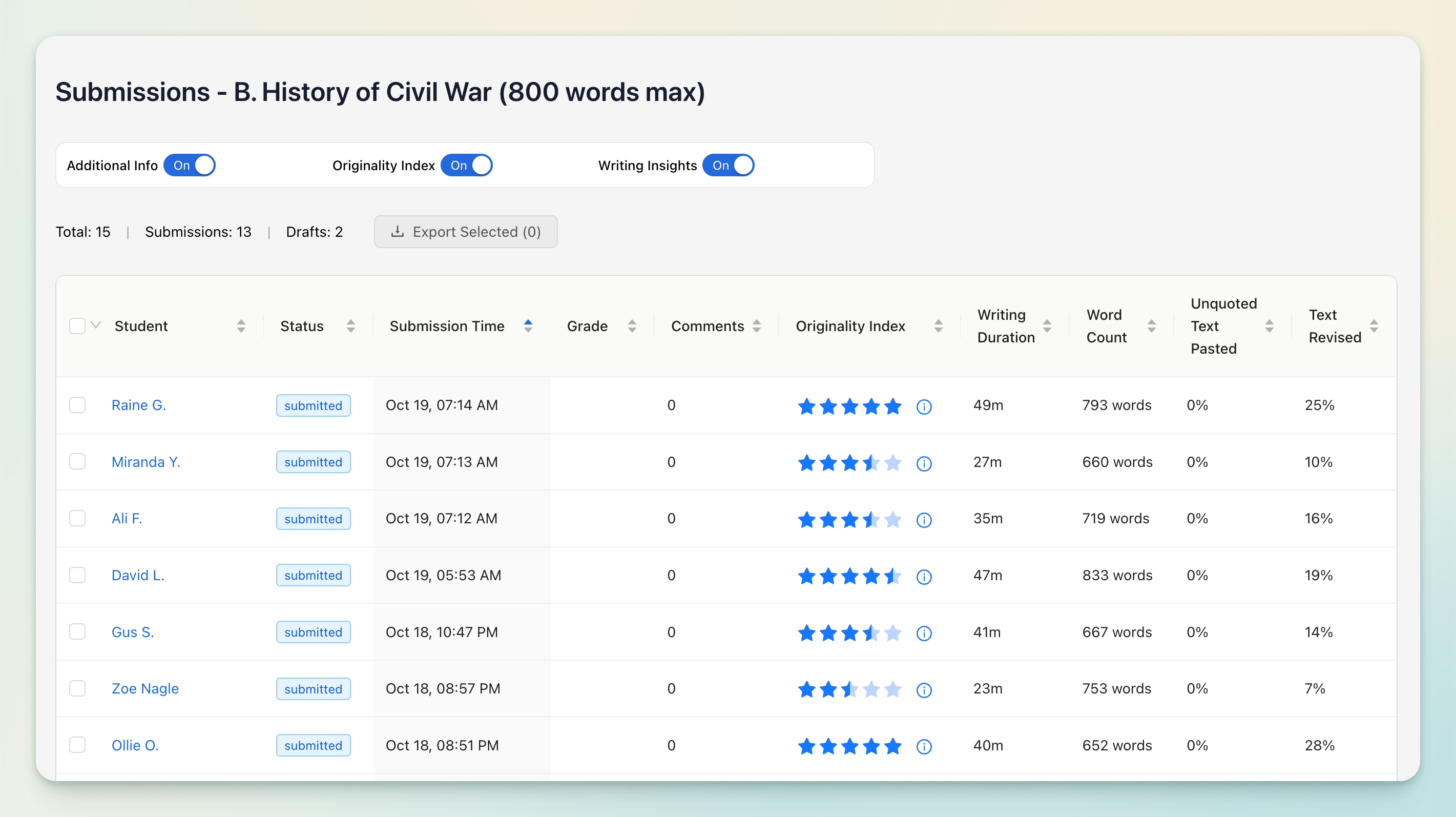

The Rumi Submission Dashboard gives instructors and administrators a layered way to evaluate student originality and effort.

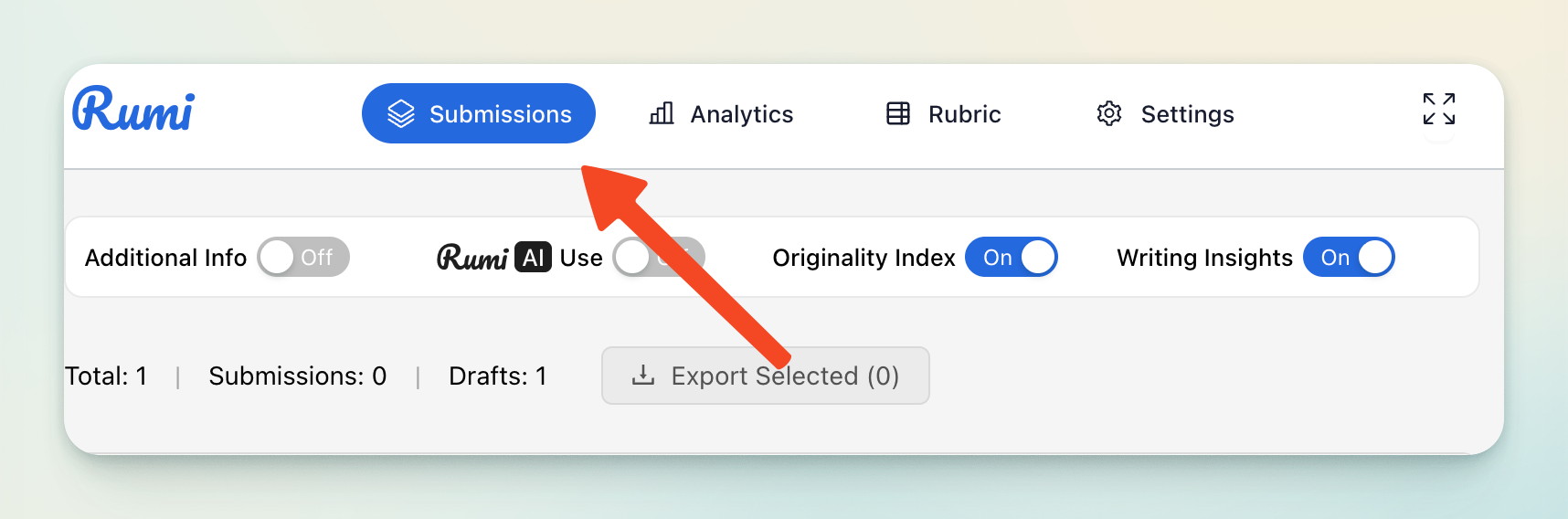

How to Access

To open the Submissions Dashboard, click the "Submissions" button at the top.

Key Data

The dashboard surfaces a wide range of data, including:

- Essays submitted and in draft

- Time of submission

- Number of comments

- Number of AI prompts used

- Originality Index (five-star rating)

- Metrics

  - Writing Duration (minutes) – the time spent actively typing. If no typing occurs for 15 seconds, the timer pauses, and it resumes when typing starts again.

  - Word Count – the word count of the final essay.

  - Unquoted Pasted Text (%) – the percentage of the essay made up of unquoted text pasted from external sources.

  - Text Revised (%) – the percentage of words changed or edited during the drafting process.
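The 15-second pause rule behind Writing Duration can be sketched as follows. This is a minimal illustration, not Rumi's implementation: it assumes the editor records a timestamp (in seconds) for every keystroke, counts each gap between consecutive keystrokes of up to 15 seconds as active typing, and excludes any longer gap entirely. The function name `writing_duration_minutes` is hypothetical.

```python
from typing import Sequence

# Pause the timer after 15 s with no typing (from the metric description above).
IDLE_THRESHOLD_S = 15.0


def writing_duration_minutes(keystroke_times: Sequence[float],
                             idle_threshold: float = IDLE_THRESHOLD_S) -> float:
    """Estimate active typing time, in minutes, from keystroke timestamps in seconds.

    Assumption (hypothetical): a gap between consecutive keystrokes counts as
    active typing only if it is at most `idle_threshold` seconds; longer gaps
    are treated as idle and contribute nothing.
    """
    times = sorted(keystroke_times)
    active_seconds = 0.0
    for previous, current in zip(times, times[1:]):
        gap = current - previous
        if gap <= idle_threshold:
            active_seconds += gap  # timer still running
        # Otherwise the timer was paused; it resumes at `current`, so the gap is skipped.
    return active_seconds / 60.0


# Two bursts of typing separated by a 5-minute break.
burst_1 = [0, 5, 10, 20, 30]    # 30 s of active typing
burst_2 = [330, 340, 350, 360]  # 30 s of active typing after a 300 s pause
print(writing_duration_minutes(burst_1 + burst_2))  # 1.0
```

The sketch drops long pauses completely; a variant that credits the first 15 seconds of every long pause would add `min(gap, idle_threshold)` for every gap instead.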

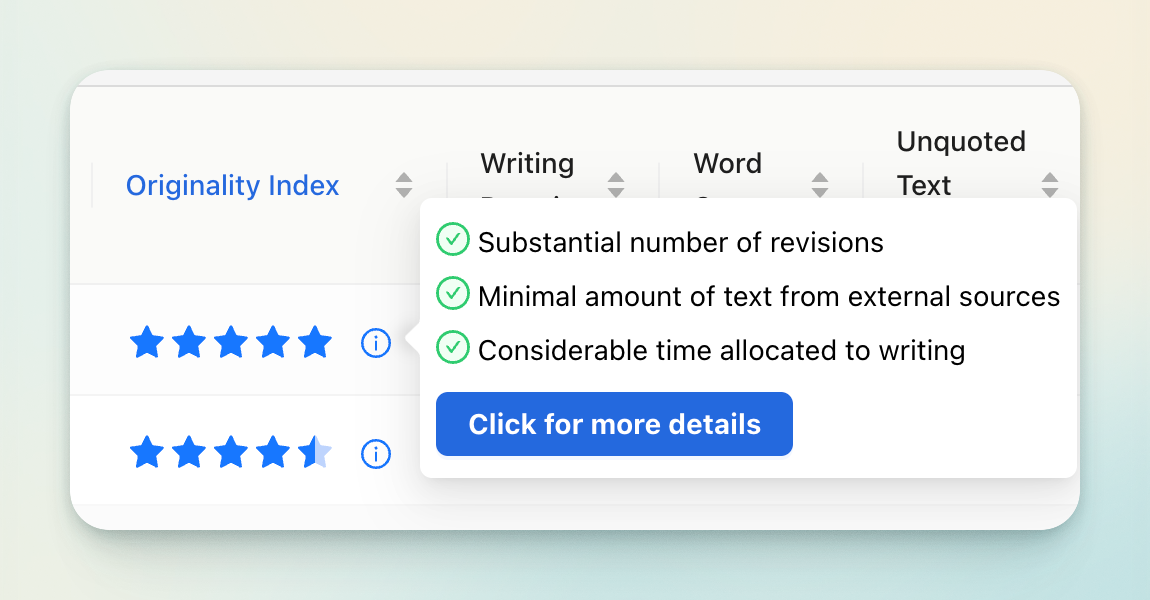

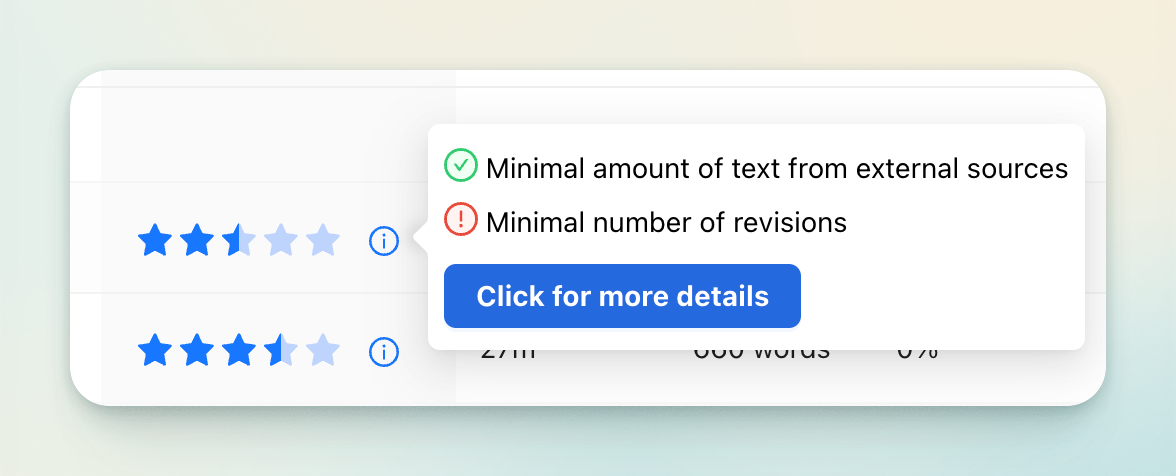

Understanding the 5-Star Originality Rating

The Rumi Originality Index condenses multiple analytics into a single 5-star rating for quick reference:

- 1 star → suggests low originality

- 5 stars → suggests high originality

However, the star rating should be used as a starting point, not a final judgment. Instructors are encouraged to follow a three-step process:

(1) Check the 5-star rating → (2) Explore essay analytics → (3) Review revision history.

This layered approach lets instructors dig deeper when needed and helps them avoid misinterpreting the score.

By combining the star rating, analytics deep dive, and revision history, instructors can distinguish between genuine student effort and cases where the data may be misleading.

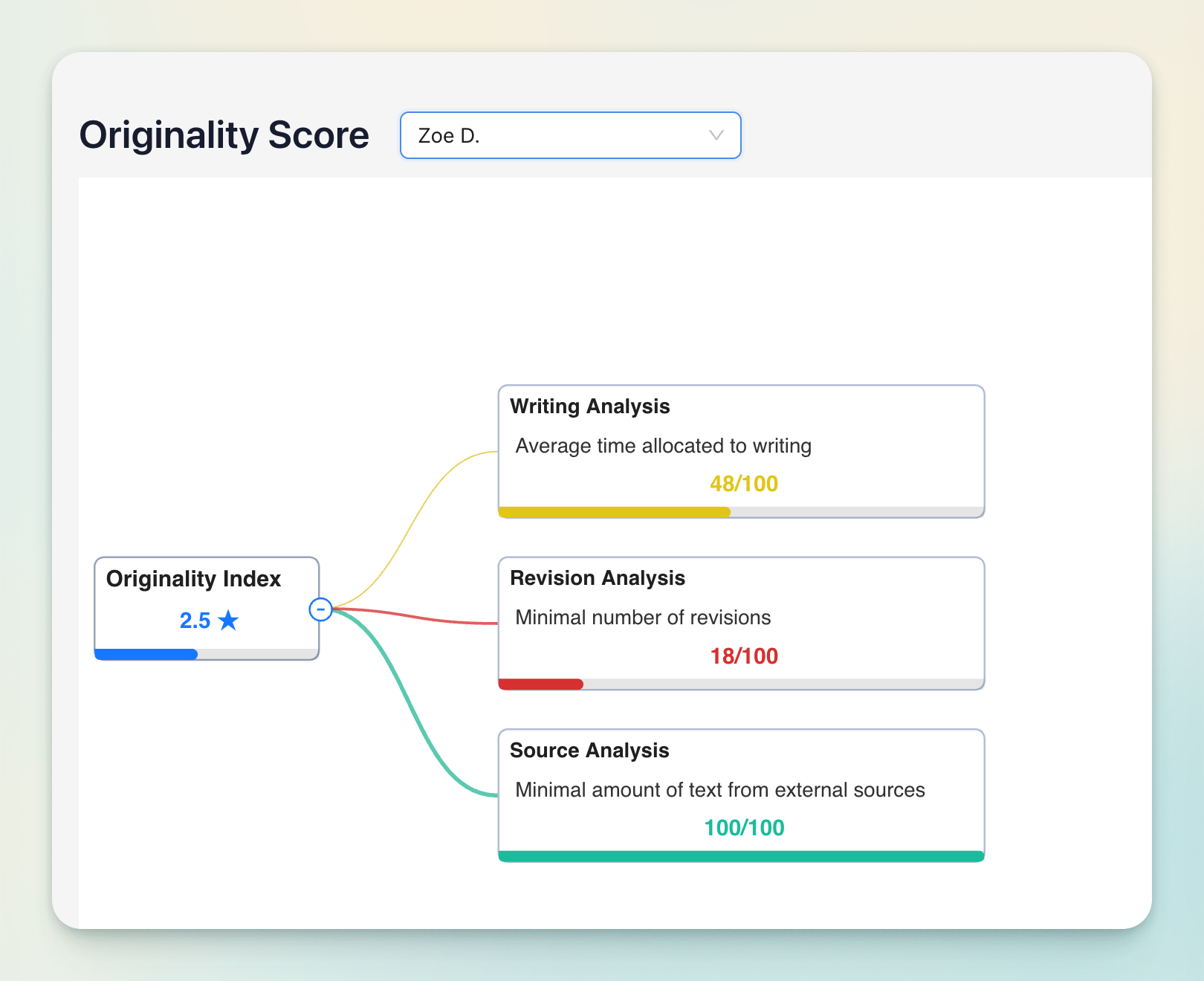

Analytics Deep Dive

The dashboard breaks originality into three non-overlapping analytic categories, so effort is never double-counted:

- Writing Analysis – Measures time spent actively typing relative to word count, excluding pasted text.

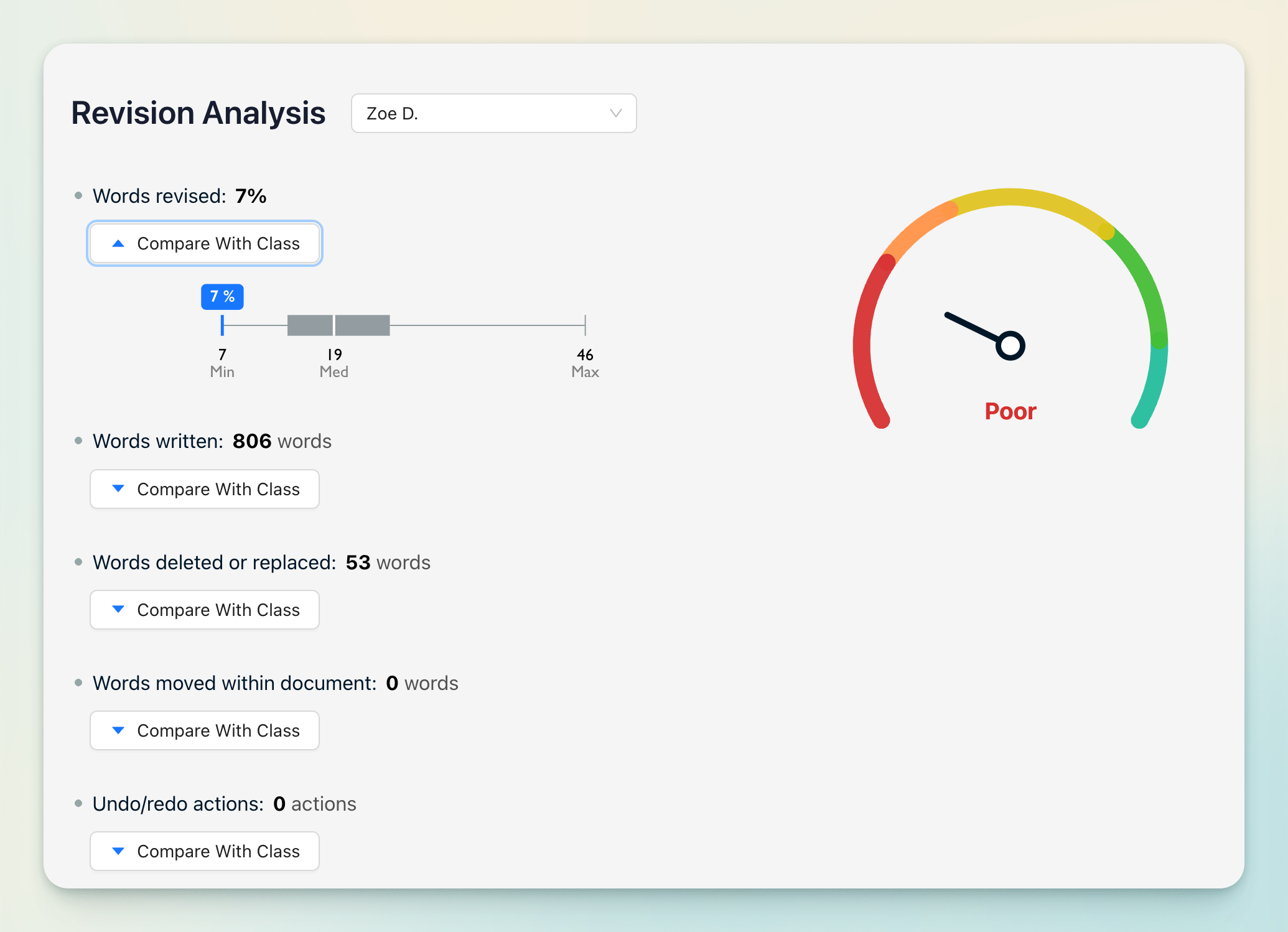

Example: A student submits a 200-word essay in 10 minutes, with 180 words pasted from external sources. Writing Analysis applies the 10 minutes only to the 20 words typed in Rumi. This keeps the pasted words from distorting the metric and shows that, for the text the student did compose themselves, they spent considerable effort per word.

- Revision Analysis – Tracks what percentage of the essay was revised. High revision suggests iterative drafting; low revision may reflect minimal editing.

- Source Analysis – Shows what proportion of text was pasted versus originally written. This helps separate original contribution from external reliance.
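The arithmetic behind the Writing Analysis example, together with the pasted-versus-typed split used by Source Analysis, can be sketched as below. This is a minimal illustration under assumed inputs, not Rumi's actual formula: the `EssayStats` class and its fields are hypothetical, and seconds per typed word stands in for however the dashboard actually scores Writing Analysis.

```python
from dataclasses import dataclass


@dataclass
class EssayStats:
    """Hypothetical per-essay inputs; the field names are illustrative, not Rumi's."""
    total_words: int
    pasted_words: int
    writing_minutes: float  # active typing time (Writing Duration)

    @property
    def typed_words(self) -> int:
        return self.total_words - self.pasted_words

    def seconds_per_typed_word(self) -> float:
        """Writing Analysis sketch: typing time spread over typed words only."""
        if self.typed_words <= 0:
            return 0.0  # nothing was typed in the editor
        return self.writing_minutes * 60 / self.typed_words

    def seconds_per_word_naive(self) -> float:
        """For comparison: the same time spread over every word, pasted ones included."""
        if self.total_words <= 0:
            return 0.0
        return self.writing_minutes * 60 / self.total_words

    def pasted_share(self) -> float:
        """Source Analysis sketch: fraction of the final essay that was pasted."""
        if self.total_words <= 0:
            return 0.0
        return self.pasted_words / self.total_words


essay = EssayStats(total_words=200, pasted_words=180, writing_minutes=10)
print(essay.seconds_per_typed_word())  # 30.0
print(essay.seconds_per_word_naive())  # 3.0
print(essay.pasted_share())            # 0.9
```

Counting only the 20 typed words gives about 30 seconds of typing per word; spreading the same 10 minutes over all 200 words would suggest only 3 seconds per word.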

Cohort Analysis

To provide context, each analytic metric in the dashboard can be viewed at the cohort level using box plots.

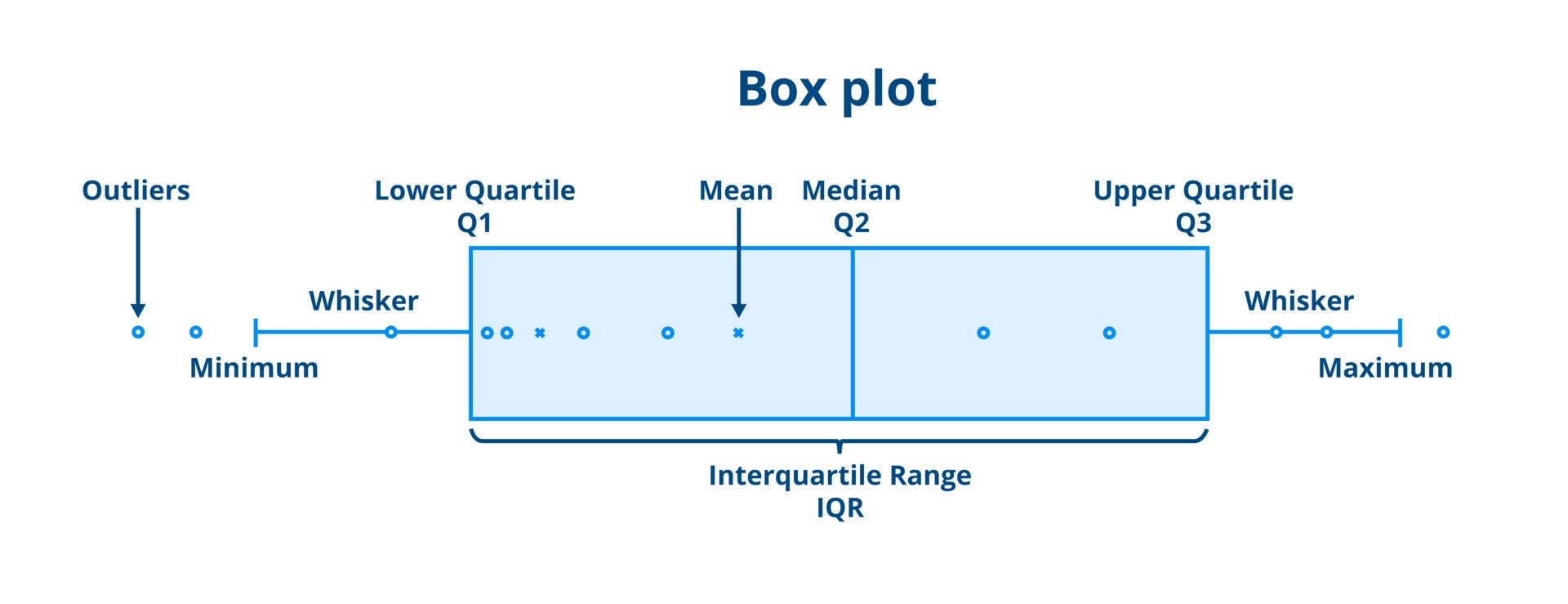

What Is a Box Plot?

A box plot summarizes the spread of data across a group and highlights how an individual compares to peers. It shows:

- The median (50th percentile) – the middle value of the dataset.

- The 25th percentile (Q1) – the point where 25% of students scored below, and 75% scored above.

- The 75th percentile (Q3) – the point where 75% of students scored below, and 25% scored above.

- The box – the shaded area between Q1 and Q3, showing the middle 50% of results.

- The whiskers – the lines extending beyond the box that show the typical range of values outside the middle 50%.

- Outliers – unusual data points that fall far outside the expected range.

Example: In Writing Duration, the box plot may show that the middle half of students typed for between 20 and 30 minutes (Q1 = 20, median = 25, Q3 = 30). A student who typed for 2 minutes would appear well below the 25th percentile, while one who typed for 2 hours would appear as a high outlier.
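The values a box plot displays, and where an individual student falls relative to them, can be computed as in the sketch below. It assumes the common Tukey convention: whiskers reach the most extreme values within 1.5 × IQR (the interquartile range, Q3 minus Q1) of the box, and anything beyond is flagged as an outlier. The dashboard may use a different rule. The cohort values and the `summarize` and `position` helpers are hypothetical, chosen to reproduce the example's Q1 = 20, median = 25, and Q3 = 30.

```python
import statistics
from dataclasses import dataclass
from typing import List


@dataclass
class BoxPlotSummary:
    q1: float
    median: float
    q3: float
    lower_whisker: float
    upper_whisker: float
    outliers: List[float]


def summarize(values: List[float], k: float = 1.5) -> BoxPlotSummary:
    """Box-plot statistics using Tukey's k * IQR fences (an assumed convention).

    Needs at least two values, as statistics.quantiles requires.
    """
    data = sorted(values)
    q1, median, q3 = statistics.quantiles(data, n=4, method="inclusive")
    iqr = q3 - q1
    low_fence, high_fence = q1 - k * iqr, q3 + k * iqr
    inside = [v for v in data if low_fence <= v <= high_fence]
    return BoxPlotSummary(
        q1=q1,
        median=median,
        q3=q3,
        lower_whisker=min(inside),  # whiskers stop at the most extreme non-outliers
        upper_whisker=max(inside),
        outliers=[v for v in data if v < low_fence or v > high_fence],
    )


def position(value: float, s: BoxPlotSummary, k: float = 1.5) -> str:
    """Describe where one student's value sits relative to the cohort."""
    iqr = s.q3 - s.q1
    if value < s.q1 - k * iqr:
        return "low outlier"
    if value > s.q3 + k * iqr:
        return "high outlier"
    if value < s.q1:
        return "below Q1"
    if value > s.q3:
        return "above Q3"
    return "within the middle 50%"


# Hypothetical Writing Duration values (minutes) for one class on one assignment.
cohort = [2, 18, 20, 22, 25, 27, 30, 33, 120]
s = summarize(cohort)
print(s.q1, s.median, s.q3)                          # 20.0 25.0 30.0
print(s.lower_whisker, s.upper_whisker, s.outliers)  # 18 33 [2, 120]
print(position(2, s), "|", position(120, s), "|", position(26, s))
# low outlier | high outlier | within the middle 50%
```

Under this convention the fences sit at 5 and 45 minutes, so both the 2-minute and the 2-hour student are flagged as outliers, while a student at 26 minutes falls inside the box.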

This makes it easy for instructors to spot whether a student’s behavior is within the normal range, below expectations, or an outlier that warrants closer review, which helps them identify students who may need extra support.

Real-World Examples: Why Context Matters

Example 1: Why a 1-Star Essay May Still Be Original

A student submits an essay and receives 1 star. At first glance, this may suggest heavy copy-pasting or low originality.

In reality, the student may have been using Rumi for the first time and forgotten to compose the essay in Rumi. Instead, they may have written it in Microsoft Word or Google Docs and then copied and pasted it into Rumi for submission.

Because Rumi only measures originality within its environment, the pasted text triggers a low rating even though the student did the work themselves.

In this case, the analytics alone are misleading. Reviewing the revision history reveals the cause: it may show almost no typing activity followed by one large paste event.

Takeaway: A 1-star essay is not always plagiarism or low effort; it may be a workflow issue.

Example 2: Why a 5-Star Essay May Not Be Genuine Work

Another student submits an essay and receives 5 stars, which suggests strong originality.

But when reviewing the revision history, the instructor sees that the student repeatedly added and deleted random words to artificially inflate their typing time and revision percentage.

This behavior can trick the metrics into suggesting "high originality," even though the student may have been copying from another source or simply trying to "game" the system.

Takeaway: A 5-star rating does not always guarantee thoughtful work. It is important to check whether edits are substantive improvements (e.g., refining arguments, improving structure) or mechanical manipulations (e.g., meaningless word changes).

Example 3: Why 2.5 Stars Can Mean Opposite Things

Two students each receive a 2.5-star rating on different assignments, but their writing processes reveal completely different scenarios:

Student A (Rough Draft Assignment): The class was asked to write a rough draft, which encourages students to get their initial thoughts down without worrying about perfection. This student writes quickly, putting down the first things that come to mind without making many edits. The essay shows an authentic voice and original thinking, but the low revision percentage and relatively short writing time result in a 2.5-star rating. Compared with the class cohort for this rough draft assignment, this student's metrics align with the assignment's expectations: genuine original work that, appropriately, has not been polished yet.

Student B (Final Essay Assignment): For a different assignment requiring a polished final essay, another student submits a highly sophisticated, perfectly structured document written in one continuous session with minimal revisions. Despite the assignment calling for a refined piece that typically requires multiple drafts, this student shows no typical drafting patterns. When compared to their classmates who all show iterative improvement and multiple revision cycles for this final essay assignment, this student is a clear outlier. The "too perfect" submission with a 2.5-star rating suggests the content may have been written elsewhere or by someone else.

Takeaway: Context is everything. A 2.5-star rating on a rough draft assignment, where minimal revision is expected, likely represents authentic work. The same 2.5-star rating for a final essay assignment where extensive revision is the norm could be a red flag. Always compare metrics with the specific class cohort and consider the assignment type before making judgments.

Recommendation

We recommend using a three-step approach:

- Start with the 5-star Originality Index for a quick, sortable overview of submissions.

- Drill into the essay analytics (writing, revision, and source metrics) for more detail, and compare them with class-level metrics (cohort analysis) if needed.

- Review the revision history for a complete picture of the student’s process.

This workflow ensures instructors can move from a high-level summary to detailed evidence as needed, without double-counting or overlooking key context.